Finagle

Table of Contents

- https://github.com/twitter/finagle

- http://twitter.github.io/finagle/

- Examples from Scala School

- Your Server As A Function

1. User's Guide

1.1. Quickstart

1.2. Concurrent Programming with Futures

- Finagle uses futures to encapsulate and compose concurrent operations such as network RPCs. Futures are directly analogous to threads — they provide independent and overlapping threads of control — and can be thought of as featherweight threads. They are cheap in construction, so the economies of traditional threads do not apply. It is no problem to maintain millions of outstanding concurrent operations when they are represented by futures.

- Futures also decouple Finagle from the operating system and runtime thread schedulers. This is used in important ways; for example, Finagle uses thread biasing to reduce context switching costs.

- Common examples of operations that are represented by Futures are:

- an RPC to a remote host

- a long computation in another thread

- reading from disk

- A Future[T], then, occupies exactly one of three states:

- Empty (pending)

- Succeeded (with a result of type T) / callback with onSuccess

- Failed (with a Throwable) / callback with onFailure

- Note that these operations are all fallible: remote hosts could crash, computations might throw an exception, disks could fail, etc.

- Composition

- Sequential composition / flatMap

- Concurrent composition / collect

- Recovering from failure / rescue
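The three composition styles above can be sketched with `com.twitter.util.Future`. This is a minimal illustration, assuming util-core is on the classpath; `authenticate`, `fetchName`, and the other method names are hypothetical stand-ins for real RPCs and are not part of Finagle.

```scala
import com.twitter.util.{Await, Future}

object FutureComposition {
  // Hypothetical stand-ins for RPCs; a real client would return pending futures.
  def authenticate(user: String): Future[Int] =
    if (user == "alice") Future.value(42)
    else Future.exception(new IllegalArgumentException(s"unknown user: $user"))

  def fetchName(id: Int): Future[String] = Future.value(s"user-$id")

  // Sequential composition: fetchName runs only after authenticate succeeds.
  def lookup(user: String): Future[String] =
    authenticate(user).flatMap(id => fetchName(id))

  // Concurrent composition: collect turns Seq[Future[A]] into Future[Seq[A]].
  def lookupAll(ids: Seq[Int]): Future[Seq[String]] =
    Future.collect(ids.map(fetchName))

  // Recovering from failure: rescue maps selected failures to a new Future.
  def lookupOrGuest(user: String): Future[String] =
    lookup(user).rescue {
      case _: IllegalArgumentException => Future.value("guest")
    }

  def main(args: Array[String]): Unit = {
    println(Await.result(lookup("alice")))
    println(Await.result(lookupAll(Seq(1, 2))))
    println(Await.result(lookupOrGuest("bob")))
  }
}
```

`Await.result` blocks the calling thread and appears here only to make the sketch observable; inside a server, the futures would normally be composed further and returned rather than awaited.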

1.3. Services & Filters

- Services and filters constitute the core abstractions with which clients and servers are constructed with the Finagle network library. They are very simple, but also quite versatile. Most of Finagle’s internals are structured around Services and Filters.

- Services

- trait Service[Req, Rep] extends (Req => Future[Rep])

- Services are used to represent both clients and servers. An instance of a service is used through a client; a server implements a Service.

- Services implement application logic.

- Filters

- It is often useful to define application-agnostic behavior as well.

- A common example of this is to implement timeouts: if a request fails to complete within a certain time, the timeout mechanism fails it with a timeout exception.

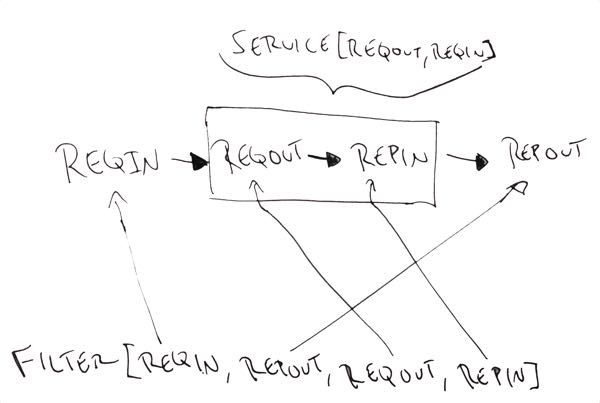

- abstract class Filter[-ReqIn, +RepOut, +ReqOut, -RepIn] extends ((ReqIn, Service[ReqOut, RepIn]) => Future[RepOut])

- trait SimpleFilter[Req, Rep] extends Filter[Req, Rep, Req, Rep]

- Composing filters and services / andThen
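To make the Service, Filter, and `andThen` pieces concrete, here is a small sketch of the timeout example mentioned above. It assumes finagle-core and util-core are on the classpath. `TimeoutSketch`, `FilterDemo`, `reverse`, and `stuck` are hypothetical names chosen for illustration; Finagle ships its own timeout filter, which this does not try to reproduce.

```scala
import com.twitter.finagle.{Service, SimpleFilter}
import com.twitter.util.{Await, Duration, Future, JavaTimer, Timer}

// Fails any request that does not complete within `timeout`.
// Hypothetical class: it only illustrates the shape of a SimpleFilter.
class TimeoutSketch[Req, Rep](timeout: Duration, timer: Timer)
    extends SimpleFilter[Req, Rep] {
  def apply(request: Req, service: Service[Req, Rep]): Future[Rep] =
    service(request).within(timer, timeout)
}

object FilterDemo {
  val timer: Timer = new JavaTimer(true) // daemon thread, so the JVM can exit

  // Application logic lives in the Service.
  val reverse: Service[String, String] = new Service[String, String] {
    def apply(request: String): Future[String] = Future.value(request.reverse)
  }

  // A service that never answers, to exercise the timeout path.
  val stuck: Service[String, String] = new Service[String, String] {
    def apply(request: String): Future[String] = Future.never
  }

  val filter = new TimeoutSketch[String, String](Duration.fromMilliseconds(100), timer)

  // andThen composes a filter with a service and yields another Service.
  val timedReverse: Service[String, String] = filter.andThen(reverse)
  val timedStuck: Service[String, String] = filter.andThen(stuck)

  def main(args: Array[String]): Unit = {
    println(Await.result(timedReverse("hello")))
    // liftToTry turns the failure into a value so it can be printed.
    println(Await.result(timedStuck("hello").liftToTry))
  }
}
```

Because the filter is generic in `Req` and `Rep`, the same instance shape can wrap any service, which is what makes it application-agnostic.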

1.4. Anatomy of a Server

1.5. Anatomy of a Client

1.6. Client Stack

Client implementations are encouraged to provide sensible defaults and leave room for application-specific behavior to be built on top of the base layer via filters or synchronization mechanisms.

- Load Balancing

- The heap balancer maintains a collection of hosts, each represented by a ServiceFactory, and equally distributes requests over the hosts.

- The default balancing strategy is to pick the host with the least number of outstanding requests, which is similar to a least connections strategy in other load balancers.

- Additionally, the load balancer deliberately introduces jitter to avoid synchronicity (and thundering herds) in a distributed system and to ensure even balancing when request concurrency is low.
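The least-outstanding-requests strategy described above can be modelled in a few lines of plain Scala. This is a simplified sketch, not Finagle's heap balancer: it uses a linear scan instead of a heap, and random tie-breaking is only a crude stand-in for the jitter the notes mention. `Node` and `LeastLoaded` are hypothetical names.

```scala
import scala.util.Random

// Hypothetical model of an endpoint; `outstanding` counts in-flight requests.
final class Node(val name: String) { var outstanding: Int = 0 }

final class LeastLoaded(nodes: Vector[Node], rng: Random) {
  // Pick a node with the fewest outstanding requests; ties are broken at random.
  def pick(): Node = {
    val min = nodes.map(_.outstanding).min
    val candidates = nodes.filter(_.outstanding == min)
    candidates(rng.nextInt(candidates.size))
  }

  // Dispatching a request increases the load on the chosen node.
  def dispatch(): Node = { val n = pick(); n.outstanding += 1; n }

  // Completing a request decreases it again.
  def release(n: Node): Unit = n.outstanding -= 1
}

object BalancerDemo {
  def main(args: Array[String]): Unit = {
    val nodes = Vector(new Node("a"), new Node("b"), new Node("c"))
    val lb = new LeastLoaded(nodes, new Random(0))
    (1 to 3).foreach(_ => lb.dispatch())
    // Each node now carries exactly one outstanding request.
    println(nodes.map(n => s"${n.name}=${n.outstanding}").mkString(", "))
  }
}
```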

- Connection Pooling

- Finagle provides a generic pool that maintains a collection of service instances.

- Each endpoint the client connects to has an independent pool with high and low watermarks.

- The WatermarkPool keeps persistent services up to the lower bound. It will keep making new services up to the upper bound if you check out more than lower-bound services, but when you release those services above the lower bound, it immediately tries to close them.

- This, however, creates a lot of connection churn if your application consistently requires more than lower-bound connections.

- #note: under the pool-management logic above, connections would be created and destroyed frequently.

- As a result, there is a separate facility for caching, with some TTL, services above the lower bound. The CachingPool caches regardless of whether there are more than lower-bound open services; it’s always caching up to (upper-bound - lower-bound) services. The cache reaches its peak value when you reach your peak concurrency (i.e. “load”), and then slowly decays, based on the TTL. #note: this complements the mechanism above: the extra connections are cached and then slowly torn down according to the TTL.

- The DefaultClient layers both pools which amounts to maintaining the low watermark (as long as request concurrency exists), queueing requests above the high watermark, and applying a TTL for services that are between [low, high].
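The watermark behavior is easier to follow with a toy model. The sketch below is plain Scala and does not use Finagle's pool classes; `Conn` and `WatermarkSketch` are hypothetical, the TTL cache is not modelled, and requests above the high watermark are rejected with `None` where a real pool would queue them.

```scala
import scala.collection.mutable

// Hypothetical connection handle.
final class Conn(val id: Int) { var open: Boolean = true }

final class WatermarkSketch(low: Int, high: Int) {
  private var nextId = 0
  private var live = 0 // connections currently open (idle or checked out)
  private val idle = mutable.Queue.empty[Conn]

  def checkout(): Option[Conn] =
    if (idle.nonEmpty) Some(idle.dequeue()) // reuse a persistent connection
    else if (live < high) { live += 1; nextId += 1; Some(new Conn(nextId)) }
    else None // at the high watermark: a real pool would queue the request

  def release(c: Conn): Unit =
    if (live > low) { c.open = false; live -= 1 } // above low watermark: close now
    else idle.enqueue(c) // at or below low watermark: keep it around

  def size: Int = live
}

object PoolDemo {
  def main(args: Array[String]): Unit = {
    val pool = new WatermarkSketch(low = 1, high = 3)
    val conns = (1 to 3).flatMap(_ => pool.checkout())
    conns.foreach(pool.release)
    // Two connections were closed on release; one stays persistent.
    println(s"open after release: ${pool.size}")
  }
}
```

Running three checkouts followed by three releases closes two connections each time, which is the churn that the caching layer with a TTL is meant to absorb.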

- Fail Fast

- The FailFastFactory attempts to reduce the number of requests dispatched to endpoints that are likely to fail. #note: connection health is checked in the background.

- It works by marking downed hosts when a connection fails, and launching a background process that repeatedly attempts to reconnect with a given backoff schedule.

- During the time that a host is marked down, the factory is marked unavailable (and thus the load balancer above it will avoid its use).

- The factory becomes available again on success or when the backoff schedule runs out.
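The mark-down and recovery rules above amount to a small state machine. The following plain-Scala sketch models only those transitions; it has no timers or real connections, and `FailFastSketch` with its method names is hypothetical rather than Finagle's FailFastFactory API.

```scala
final class FailFastSketch(schedule: List[Long]) {
  private var pending: List[Long] = Nil
  private var down = false

  // The load balancer would consult this before dispatching to the host.
  def isAvailable: Boolean = !down

  // A connection attempt failed: mark the host down and arm the backoff schedule.
  def markDown(): Unit = if (!down) { down = true; pending = schedule }

  // Report one background reconnect attempt. Returns the delay (ms) to wait
  // before the next attempt, or None when no further attempt is needed.
  def reconnectAttempt(succeeded: Boolean): Option[Long] =
    if (!down) None
    else if (succeeded) { down = false; None }
    else pending match {
      case delay :: rest => pending = rest; Some(delay)
      case Nil           => down = false; None // schedule ran out: available again
    }
}

object FailFastDemo {
  def main(args: Array[String]): Unit = {
    val ff = new FailFastSketch(List(100L, 200L))
    ff.markDown()
    println(ff.isAvailable)               // host is marked down
    println(ff.reconnectAttempt(false))   // wait the first backoff
    println(ff.reconnectAttempt(false))   // wait the second backoff
    println(ff.reconnectAttempt(false))   // schedule exhausted
    println(ff.isAvailable)               // host is available again
  }
}
```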

- Timeouts

- The DefaultClient exposes parameters that enforce timeouts at varying levels of the client stack:

- maxIdletime - The maximum time for which any Service is permitted to be idle.

- maxLifetime - The maximum lifetime for any Service.

- serviceTimeout - The maximum amount of time allowed for acquiring a Service.

- By default these are disabled, and DefaultClient implementations should only enforce them with care.

- Retries

- The filter can be configured either to retry a specific number of times or to adhere to a backoff strategy.
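Both retry modes can be expressed with one schedule of delays. The sketch below is a synchronous, plain-Scala illustration of the idea only; Finagle's retry filter works on futures and timers, and `RetrySketch.retry` is a hypothetical helper, not its API.

```scala
import scala.annotation.tailrec
import scala.util.{Failure, Success, Try}

object RetrySketch {
  // Run `op` until it succeeds or the schedule of delays (ms) is exhausted.
  // A schedule of n zero delays behaves like "retry n times";
  // growing delays give a backoff strategy.
  @tailrec
  def retry[A](backoffsMs: List[Long])(op: () => Try[A]): Try[A] =
    op() match {
      case s @ Success(_) => s
      case f @ Failure(_) =>
        backoffsMs match {
          case Nil => f // no retries left: surface the last failure
          case delay :: rest =>
            Thread.sleep(delay)
            retry(rest)(op)
        }
    }

  def main(args: Array[String]): Unit = {
    var calls = 0
    // Hypothetical flaky operation that succeeds on the third call.
    val result = retry(List(10L, 20L)) { () =>
      calls += 1
      if (calls < 3) Failure(new RuntimeException("boom")) else Success(calls)
    }
    println(result)
  }
}
```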

1.7. Metrics

| Metric | Description |
| --- | --- |
| closechan | This counter is incremented every time a connection is closed. Timed out connections are closed. By default an idle connection times out in 5 seconds (hostConnectionIdleTime). |
| connection_duration | A stat representing the distribution of the duration of a connection. closechan and connection_duration.count must be equal. |
| connection_received_bytes | Bytes received per connection. |
| connection_requests | Number of requests per connection, observed after it closes. |
| connection_sent_bytes | Bytes sent per connection. |
| connections | The current number of connections between client and server. |
| handletime_us | The walltime elapsed while handling a request. |
| requests | The number of requests dispatched. |
| pending | Number of pending requests (i.e. requests without responses). |
| request_latency_ms | The time from the beginning of a request until the response is received. |

- Connection Pool Stats

- pool_cached - represents the number of cached tcp connections to a particular host.

- pool_size - represents the number of connections open to the host.

- pool_waiters - the number of requests that are queued while waiting for a connection.

- Load Balancer Stats

- size - represents the current number of nodes used by the balancer.

- adds - the cumulative node additions over the life time of the client.

- removes - the cumulative node removals over the life time of the client.